Removing Duplicate Lines

[Question]

Does anyone know of a python/bash script for removing duplicate lines?

I dumped the contents of an EPROM yesterday & need to find a faster way to remove duplicate data.

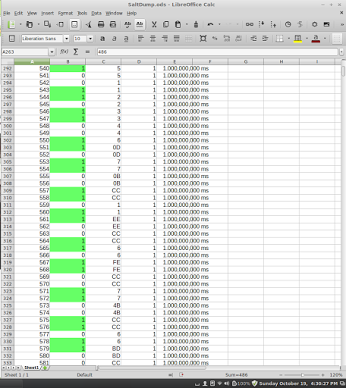

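The following columns are sample #, clock (high or low), 8 bits of data, reset bit, and sample period. I have it in a text file but brought it into a spreadsheet to make it easier to look at and delete lines.

[Answer]

The task is to remove duplicate rows from data that is already grouped. Python or bash can do this with hand-written code, but the process is fairly tedious. Consider trying SPL instead: its grouping function supports a rich set of options and can take the first record of each group directly, so a single statement is enough: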

| | A |
|---|---|
| 1 | =file("eprom.log").import().group@1o(_2) |

A1: part of the result is shown below:

540 1 5 1 1000000000ms

541 0 5 1 1000000000ms

543 1 1 1 1000000000ms

545 0 2 1 1000000000ms

546 1 3 1 1000000000ms

548 0 4 1 1000000000ms
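
For readers who want to stay in Python, as the question asked, the same idea can be sketched with `itertools.groupby`, which groups only adjacent items and so keeps the first row of each run. This is a minimal sketch, not a translation of the SPL statement: it assumes the file is whitespace-delimited with no header row and that the key is the second column (the clock), matching `_2` above. The function names `first_of_each_run` and `dedupe_file` are hypothetical, and rows 542 and 544 in the sample are invented for illustration.

```python
from itertools import groupby


def first_of_each_run(lines, key_col=1):
    """Keep the first line of every run of adjacent lines that share the
    same value in column `key_col` (0-based; 1 = the clock column)."""
    # Drop blank lines and trailing newlines before grouping.
    rows = (line.rstrip("\n") for line in lines if line.strip())
    # groupby only merges *adjacent* equal keys, so no sorting is needed.
    runs = groupby(rows, key=lambda row: row.split()[key_col])
    return [next(run) for _, run in runs]


def dedupe_file(path):
    """Read a log file and return it with adjacent duplicates removed."""
    with open(path, encoding="utf-8") as f:
        return first_of_each_run(f)


# Hypothetical input: rows 542 and 544 are made up to show the collapsing.
sample = [
    "540 1 5 1 1000000000ms",
    "541 0 5 1 1000000000ms",
    "542 0 5 1 1000000000ms",
    "543 1 1 1 1000000000ms",
    "544 1 1 1 1000000000ms",
    "545 0 2 1 1000000000ms",
]

for row in first_of_each_run(sample):
    print(row)
```

Calling `dedupe_file("eprom.log")` would apply the same filter to the dumped file. Pass a different `key_col` if the duplicates should be detected on another column, such as the data byte.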